
How to Ask Sensitive Survey Questions

Updated September 27, 2021

Ideally, we could avoid asking respondents about sensitive topics that may make them feel embarrassed or uncomfortable. In certain situations, though, asking sensitive questions is necessary to get the insights we need. For example, almost all election studies ask respondents about voting behavior, which can be sensitive – especially when respondents did not vote.


What is considered a sensitive question?

There are lots of ways that a question can be sensitive, and this may affect the strategies you use to encourage accurate reporting. Examples of sensitive topics include:

- Illegal behaviors (drug use or committing a crime)

- Anything that poses a threat or risk if disclosed to the wrong party (cheating or identification of a pre-existing health condition)

- Invasion of privacy (income, location information)

- Emotionally upsetting (victimization or detailing chronic health problems)

- Endorsement of unpopular behaviors or attitudes (abortion, racism)

- Questions with socially desirable responses (voting, wearing a seatbelt, exercising regularly)

Other factors can also affect whether a survey is perceived as sensitive. These include the perceived privacy or confidentiality of the survey, which is influenced by the presence of an interviewer or other people, the survey mode (e.g., web versus in-person), and the legitimacy of the organization requesting the information.


What are some of the potential consequences of asking sensitive questions?

There are three primary consequences of asking sensitive questions, all of which can affect the quality of your data (Tourangeau and Yan, 2007):

- People refuse to start your survey at all (if the topic itself is sensitive), or they break off and do not finish once they reach the sensitive questions

- Respondents agree to complete the survey, but they leave the sensitive questions blank or answer “Don’t Know”

- Respondents provide inaccurate responses; that is, they overreport socially desirable behaviors and underreport socially undesirable behaviors

What can we do to collect better data when asking sensitive questions?

Below we identify 11 strategies for asking sensitive questions in surveys to improve the quality of data collected. Be sure to keep in mind: if you don't actually need sensitive data, it is best to avoid these kinds of questions altogether.

1. Use a self-administered mode such as web or mail surveys

One of the most effective strategies is to use a self-administered mode such as web or mail instead of an interviewer-administered mode such as telephone or in-person. Not surprisingly, it is much easier for people to admit to less-than-desirable behavior when there is no interviewer around to judge them.

A meta-analysis by Tourangeau and Yan (2007) reviewed seven studies that experimentally compared asking about illicit drug use in an interviewer-administered mode versus a self-administered mode. The median increase in reported illicit drug use in the self-administered mode was 30%!
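To make the arithmetic behind a figure like this concrete, the short sketch below assumes the 30% is a relative increase in reported prevalence: for each study, the self-administered estimate divided by the interviewer-administered estimate, minus one, then summarized with the median across studies. The prevalence values are hypothetical placeholders, not data from the meta-analysis.

```python
from statistics import median

def relative_increase(self_admin: float, interviewer_admin: float) -> float:
    """Percent increase in reported prevalence for the self-administered mode
    relative to the interviewer-administered mode."""
    if interviewer_admin <= 0:
        raise ValueError("interviewer-administered estimate must be positive")
    return (self_admin / interviewer_admin - 1.0) * 100.0

# Hypothetical (self-administered, interviewer-administered) prevalence pairs
# for three illustrative studies; NOT values from Tourangeau and Yan (2007).
studies = [(0.12, 0.10), (0.25, 0.20), (0.09, 0.06)]

increases = [relative_increase(s, i) for s, i in studies]
median_increase = median(increases)
print(f"Median increase in reported use: {median_increase:.1f}%")
```

Using the median rather than the mean keeps one study with an unusually large gap from dominating the summary.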

2. Assure confidentiality

Describe in the survey invitation or survey introduction what steps are being taken to ensure confidentiality and to protect responses. For example, “Your responses are confidential. We will never associate your name with any of your survey responses. Individual responses will be combined together with those of other respondents and reported as a group.”

Be careful not to say that your survey is anonymous unless it really is. Anonymous means you are not tracking who completes the survey and not collecting any personally identifiable data that could later be used to identify someone. For example, if you are a small company doing a survey of your employees and you only have one Hispanic female employee over age 50, your survey will not be anonymous if you also ask for respondents’ ethnicity, gender, and age.
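One way to catch the small-company problem before fielding a survey is a k-anonymity-style count: tally how many respondents share each combination of demographic answers and flag combinations below a minimum group size. The sketch below uses hypothetical field names and a threshold of k = 5, which is an illustrative choice rather than a rule from this article.

```python
from collections import Counter

def small_cells(responses, quasi_identifiers, k=5):
    """Return demographic combinations shared by fewer than k respondents.

    responses: list of dicts, one per respondent.
    quasi_identifiers: keys whose combined values could identify someone.
    """
    combos = Counter(tuple(r[q] for q in quasi_identifiers) for r in responses)
    return {combo: n for combo, n in combos.items() if n < k}

# Hypothetical responses from a small company survey.
responses = (
    [{"ethnicity": "White", "gender": "Male", "age": "26-49"}] * 6
    + [{"ethnicity": "White", "gender": "Female", "age": "26-49"}] * 5
    + [{"ethnicity": "Hispanic", "gender": "Female", "age": "50+"}]
)

flagged = small_cells(responses, ["ethnicity", "gender", "age"], k=5)
print(flagged)
```

Any flagged combination is a signal to collapse categories (for example, wider age groups) or to drop one of the demographic questions.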

3. Explain why you are asking this question

If respondents just think we are being nosy, they will be less inclined to answer our questions. If we can adequately explain why we want to know this information (and how it will be used), respondents will be more willing to answer the questions and answer them honestly. This can be done before asking the question or as a prompt that appears if respondents skip a question.

For example, before asking a detailed series of financial questions, a survey might include the following information: “We ask about income and housing costs to understand whether housing is affordable in local communities.”

4. Put your demographic questions at the end

Sharing intimate details about yourself (via answers to demographic questions) at the beginning of a survey reduces the perceived confidentiality of the survey. This may make people more reluctant to answer sensitive questions honestly. It is better to save the demographic questions for the end.

5. Present sensitive questions toward the end (but not the very end)

If your survey only has a few sensitive questions, place those toward the end (but before the demographics). Starting a survey and immediately answering sensitive questions can be jarring for respondents. It is best to build trust by asking less sensitive questions first. Once you have established rapport, then ask the sensitive questions.
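The ordering advice in strategies 4 and 5 can be expressed as a simple sort. In this sketch the block labels and the `Question` class are hypothetical; a survey platform would normally handle ordering through its own block settings.

```python
from dataclasses import dataclass

# Hypothetical block labels; a lower rank means the block is asked earlier.
BLOCK_ORDER = {"general": 0, "sensitive": 1, "demographic": 2}

@dataclass
class Question:
    text: str
    block: str  # must be one of the keys in BLOCK_ORDER

def order_questionnaire(questions):
    """Put general questions first, sensitive ones next, demographics last.

    sorted() is stable, so questions keep their drafted order within a block.
    """
    return sorted(questions, key=lambda q: BLOCK_ORDER[q.block])

draft = [
    Question("What is your age range?", "demographic"),
    Question("How satisfied are you with your commute?", "general"),
    Question("How often have you driven after drinking?", "sensitive"),
    Question("How many days a week do you work from home?", "general"),
]

for q in order_questionnaire(draft):
    print(q.block, "|", q.text)
```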

6. Use ranges rather than asking for a specific numeric value

Although continuous numeric data are often easier to analyze, asking respondents to select an applicable range is less sensitive. For example, instead of asking for date of birth, consider whether an age range will be sufficient (e.g., 18 to 25, 26 to 49, 50 to 64, 65 and older). Similarly, respondents are more willing to report an income range than their exact income. The wider the ranges, the less sensitive the request.
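The analysis cost of ranges shows up when you need a summary such as a mean. A common workaround is midpoint coding: replace each range with the average of its endpoints. The sketch below applies this to the age ranges above; the value used for the open-ended "65 and older" range is an assumed placeholder, since that range has no natural midpoint.

```python
from statistics import mean

# Midpoints for the age ranges listed above. The open-ended top range has no
# natural midpoint; 70 is an assumed placeholder chosen for illustration.
AGE_MIDPOINTS = {
    "18 to 25": (18 + 25) / 2,
    "26 to 49": (26 + 49) / 2,
    "50 to 64": (50 + 64) / 2,
    "65 and older": 70.0,
}

def approximate_mean_age(range_answers):
    """Approximate the mean age by coding each range answer to its midpoint."""
    return mean(AGE_MIDPOINTS[a] for a in range_answers)

# Hypothetical answers from four respondents.
answers = ["18 to 25", "26 to 49", "26 to 49", "65 and older"]
print(approximate_mean_age(answers))
```

The result is only an approximation, and it is sensitive to the value chosen for the open-ended range.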

A strategy that is particularly useful for asking about income, which can have missing data rates as high as 30%, is unfolding brackets. Unfolding brackets are a series of branching questions that narrow in on a specific range. For example, you first ask respondents whether their annual income is more or less than a certain amount, such as $20,000. You then ask a series of similarly structured dichotomous questions until a reasonable range can be determined. The downside, of course, is that this requires more questions, which can increase respondent burden.
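The branching logic can be sketched as a binary search over a sorted list of cutoffs. The cutoffs below are hypothetical and were chosen so that the first question lands on the $20,000 example; `is_above` stands in for the respondent's yes/no answer to "Is your annual income more than $t?". Real instruments often script a fixed sequence of follow-up amounts instead of computing one.

```python
from typing import Callable, List, Optional, Tuple

def unfolding_brackets(
    cutoffs: List[int], is_above: Callable[[int], bool]
) -> Tuple[Optional[int], Optional[int], int]:
    """Narrow an amount to a bracket using yes/no "more than $t?" questions.

    cutoffs must be sorted ascending. Returns (lower, upper, questions_asked),
    meaning lower < amount <= upper; None marks an open-ended bracket.
    """
    lo, hi, asked = 0, len(cutoffs), 0
    while lo < hi:
        mid = (lo + hi) // 2
        asked += 1
        if is_above(cutoffs[mid]):
            lo = mid + 1  # amount is above this cutoff
        else:
            hi = mid      # amount is at or below this cutoff
    lower = cutoffs[lo - 1] if lo > 0 else None
    upper = cutoffs[lo] if lo < len(cutoffs) else None
    return lower, upper, asked

# Hypothetical cutoffs; the middle one ($20,000) is asked first.
CUTOFFS = [5_000, 10_000, 20_000, 50_000, 100_000]

# Simulate a respondent whose (unreported) income is $35,000.
print(unfolding_brackets(CUTOFFS, lambda t: 35_000 > t))
```

With five cutoffs, no respondent answers more than three dichotomous questions, which illustrates the trade-off between precision and burden.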

7. Use “Question Loading” strategies to normalize the behavior and counter the bias

Let’s say you need to ask respondents if they voted in the last election. Consider the following two versions of a question:

(a) “Did you vote in the last election?”

(b) “There are many reasons why people don’t get a chance to vote. Did you happen to vote in the last election?”

“Good citizens” vote, right? It means you are informed and that you care. However, it is also possible someone is informed and cares but could not vote for a good reason – they were sick, their car broke down, they could not get out of work. Version b of the question acknowledges that there are many reasons why someone would (or would not) do a particular behavior and removes some of the judgment associated with providing a socially undesirable response.

Question loading can be brief, such as simply saying “there are many reasons,” or it can be more complex:

(c) “In talking to people about elections, we find that a lot of people were not able to vote because they weren’t registered, they were sick, or they just didn’t have the time. How about you? Did you vote in the election this November?”

It may help to test out a few versions to determine what level of question loading works best without complicating the question or introducing other biases.

8. Ask a direct frequency question rather than using a yes/no filter

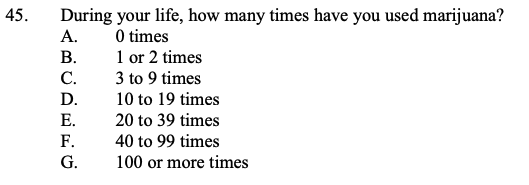

For example, the 2019 Youth Risk Behaviors Survey uses the following question to ask about marijuana use:

By directly asking how many times the respondent has used marijuana – instead of first asking if they have ever used marijuana – the question makes a slight assumption that the respondent has used marijuana. Respondents can still answer 0 times if they have never used marijuana, but the direct frequency approach makes it more likely that respondents report using marijuana.

When using this approach, you have to be careful about the question flow. The Youth Risk Behavior Survey first asks about cigarette and tobacco use, and then about alcohol use, before asking about marijuana use. It would be inappropriate to ask people how often they use heroin without first setting up the context.

9. Skew response scale in the opposite direction of the bias

Respondents tend to inflate socially desirable behaviors (how often they exercise) and underreport socially undesirable behaviors (how much TV they watch). You can counter this by skewing the response scale slightly in the opposite direction of the bias: add a few extra categories at the socially undesirable end of the scale and list the least desirable response options first.

10. Ask an even more sensitive question before your sensitive question of interest

You can desensitize your respondents by asking a slightly more sensitive question just before your question of interest. For example, if you want to know how often respondents have driven a car while drinking, first ask how often they have driven while drinking and using drugs. Make sure the extra question is not so sensitive that it backfires!

11. Use an indirect approach such as the Item Count Technique

The Item Count Technique is a split-sample design in which a random half of the respondents receive a list of non-sensitive behaviors and the other half receive the same list plus the sensitive behavior of interest. Respondents then report the total number of behaviors they have done, but do NOT indicate which specific behaviors they have done. Below is an example of the items used in an item count study conducted by Kuha and Jackson (2014):

“I am now going to read you a list of five [six] things that people may do or that may happen to them. Please listen to them and tell me how many of them you have done or have happened to you in the last 12 months. Do not tell me which ones are and are not true for you. Just tell me how many you have done at least once.”

- Attended a religious service, except for a special occasion like a wedding or funeral

- Went to a sporting event

- Attended an opera

- Visited a country outside [your country]

- Had personal belongings such as money or a mobile phone stolen from you or from your house

- Treatment group only: Bought something you thought might have been stolen

Researchers then compare the average count in the group that received the sensitive item with the average count in the group that did not to estimate the proportion of respondents who endorse the sensitive behavior. For this to work well, the control (non-sensitive) behaviors should include some with a relatively low prevalence. If respondents are likely to endorse all of the control behaviors, the strategy will not work as well: a respondent in the treatment group who reports the maximum possible count has effectively disclosed the sensitive behavior.

The downside is that this method only provides estimates for the entire sample and not for individual respondents.
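The comparison described above is usually made with a difference-in-means estimator: the average count in the treatment group minus the average count in the control group estimates the share of respondents who did the sensitive behavior. The sketch below computes that estimate, a conventional standard error for two independent groups, and the share of treatment respondents at the ceiling. The counts are hypothetical, and with samples this small the estimate is very imprecise; note that it yields one number for the whole sample, not a value for each respondent.

```python
from math import sqrt
from statistics import mean, variance

def item_count_estimate(control, treatment):
    """Difference-in-means estimate for an item count (list) experiment.

    control: counts from respondents who saw only the non-sensitive items.
    treatment: counts from respondents who also saw the sensitive item.
    Returns (estimated proportion, standard error).
    """
    estimate = mean(treatment) - mean(control)
    # Standard error for a difference between two independent sample means.
    se = sqrt(variance(treatment) / len(treatment) + variance(control) / len(control))
    return estimate, se

def ceiling_share(treatment, n_control_items):
    """Share of treatment respondents who report every item, sensitive one
    included; their answers disclose the sensitive behavior."""
    return sum(c == n_control_items + 1 for c in treatment) / len(treatment)

# Hypothetical counts for the five-item control list used above.
control = [1, 2, 0, 1, 2, 1, 1, 2]
treatment = [1, 2, 1, 1, 2, 2, 1, 2]

est, se = item_count_estimate(control, treatment)
print(f"Estimated share: {est:.2f} (SE {se:.2f})")
print(f"Ceiling share: {ceiling_share(treatment, n_control_items=5):.2f}")
```

Including some low-prevalence control items keeps the ceiling share near zero, which is why the advice above matters.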

Other indirect techniques include endorsement experiments and the Randomized Response Technique. For a comparison of different indirect methods, see Rosenfeld, Imai, and Shapiro (2015).


Resources

Tourangeau, R., & Yan, T. (2007). Sensitive questions in surveys. Psychological Bulletin, 133(5): 859-883. DOI: 10.1037/0033-2909.133.5.859

Rosenfeld, B., Imai, K., & Shapiro, J. N. (2015). An empirical validation study of popular survey methodologies for sensitive questions. American Journal of Political Science, 60(3): 783-802. DOI: 10.1111/ajps.12205